In this post I will show you how to work with files in PowerShell, specifically how to read and write them. I will cover a handful of different cmdlets you can use to read files, as well as to save information to a file. As with most things PowerShell, it’s actually quite easy!

We have several cmdlets available to us for interacting with files in PowerShell. These are the ones I will be discussing in this post:

- Get-Content

- Set-Content

- Add-Content

- Out-File

- Export-Csv

- Import-Csv

- Export-Clixml

- Import-Clixml

And if none of these can get the job done, you also have the option of using .NET directly. But let’s start by going through each of these cmdlets to see what they can do.

Get-Content

This cmdlet can be used to get content from a file.

Get-Content file.txt

Get-Content -Path .\file.txt

In its simplest form, this is how you use Get-Content. You supply the path to a file using the Path parameter. This parameter is positional, so you can omit the parameter name entirely as long as the path is the first value after the cmdlet name. The contents of the file are displayed on the screen.

The Path parameter even supports wildcards, so you can do the following:

Get-Content .\file1*.txt

This command reads the contents of all files in the current directory whose names start with ‘file1’ and have the extension ‘txt’, and displays them on the screen.

On top of this, the Path parameter also accepts input from the pipeline by property name. What does this mean? If you run a separate command that outputs objects with a Path property and pipe them into Get-Content, the value of that property is automatically bound to the Path parameter. This is easiest to show with an example:

Get-ChildItem demo*.txt | Get-Content

Get-ChildItem is often used to list files on disk. If it isn’t immediately familiar to you, you probably know it better by its aliases ‘ls’ and ‘dir’. This cmdlet outputs objects of type ‘System.IO.FileInfo’. FileInfo doesn’t actually have a Path property, but PowerShell adds a PSPath property to each item the file system provider returns, and PSPath is an alias of Get-Content’s LiteralPath parameter, which also accepts input from the pipeline by property name. That is how each file’s path gets bound automatically, and why, as you see in the example, we don’t have to supply a path to Get-Content at all.
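To see binding by property name in action, here is a minimal sketch you can run anywhere. It assumes a scratch folder under the system temp directory and two hypothetical files, demo1.txt and demo2.txt. It shows that file objects from Get-ChildItem carry a PSPath property (which binds to Get-Content’s LiteralPath parameter through its PSPath alias), and that any object with a Path property, such as a hand-made [pscustomobject], binds to the Path parameter the same way.

```powershell
# Scratch folder with two hypothetical demo files.
$dir = Join-Path ([System.IO.Path]::GetTempPath()) 'gc-pipeline-demo'
New-Item -ItemType Directory -Path $dir -Force | Out-Null
Set-Content -Path (Join-Path $dir 'demo1.txt') -Value 'first file'
Set-Content -Path (Join-Path $dir 'demo2.txt') -Value 'second file'

# FileInfo objects carry a PSPath property added by PowerShell...
Get-ChildItem -Path $dir -Filter 'demo*.txt' | Select-Object -First 1 -Property Name, PSPath

# ...which binds to Get-Content's LiteralPath parameter (alias PSPath).
$fromFiles = Get-ChildItem -Path $dir -Filter 'demo*.txt' | Get-Content

# Any object with a Path property binds to -Path by property name.
$fromCustom = [pscustomobject]@{ Path = (Join-Path $dir 'demo1.txt') } | Get-Content
```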

But Get-Content supports several other interesting parameters as well.

Exclude

This parameter lets you exclude certain files from the path, and supports wildcards.

Get-Content *.txt -Exclude VeryLongFile.txt

Get-Content *.txt -Exclude VeryLong*

Here we are getting all text files in the current directory, but we are omitting either the specific file ‘VeryLongFile.txt’ (the first example), or all files starting with ‘VeryLong’ (second example).

Include

Similarly, we can choose to include only certain files like so:

Get-Content *.txt -Include Demo*

Here we set up a broad filter on the path (all txt files) but narrow it down using the Include parameter to only the files starting with ‘Demo’.

Filter

The Filter parameter lets you narrow down the results of the Path parameter, much like Exclude and Include. According to the help information, though, it is more efficient, because the filter is applied by the provider (in this case the FileSystem provider) while it retrieves the objects, instead of PowerShell filtering the objects after they have been retrieved.

Get-Content *.txt -Filter Demo*

This example is similar to the Include example, but uses the Filter parameter instead, to only show files starting with the word ‘Demo’ that have the extension ‘txt’.
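Here is a self-contained sketch of Exclude and Include, assuming a scratch folder in the temp directory and three hypothetical files (Demo1.txt, Demo2.txt and VeryLongFile.txt). According to the help, Include and Exclude are only effective when the path refers to the contents of a folder, which is why the path here ends in a wildcard.

```powershell
# Scratch folder with three hypothetical text files.
$dir = Join-Path ([System.IO.Path]::GetTempPath()) 'gc-filter-demo'
New-Item -ItemType Directory -Path $dir -Force | Out-Null
Set-Content -Path (Join-Path $dir 'Demo1.txt') -Value 'demo one'
Set-Content -Path (Join-Path $dir 'Demo2.txt') -Value 'demo two'
Set-Content -Path (Join-Path $dir 'VeryLongFile.txt') -Value 'very long'

# A broad wildcard path matching every .txt file in the folder.
$allTxt = Join-Path $dir '*.txt'

# Exclude: every .txt file except those whose name starts with 'VeryLong'.
$excluded = Get-Content -Path $allTxt -Exclude 'VeryLong*'

# Include: keep only the .txt files whose name starts with 'Demo'.
$included = Get-Content -Path $allTxt -Include 'Demo*'
```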

ReadCount

The ReadCount parameter lets you control how many lines are read before being sent through the pipeline. By default, Get-Content sends each line through the pipeline as soon as it has been read. Setting ReadCount to 0 makes it read all lines first and then send them on together as a single array.

Let’s look at some examples that explain how this works. I have created a text document with 10 lines of text for these examples.

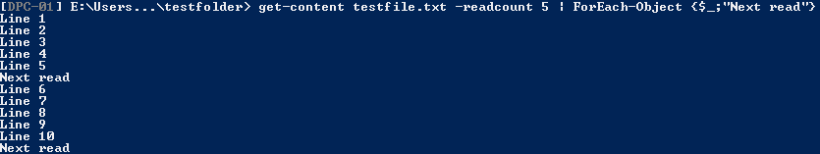

Get-Content testfile.txt | Foreach-Object {$_; "Next Read"}

Here you see the result of the default ReadCount, where each line is read and sent through the pipeline individually. Let’s set ReadCount to 5 and see what happens.

Get-Content testfile.txt -ReadCount 5 | Foreach-Object {$_; "Next Read"}

As you see, Get-Content now reads 5 lines before sending them through the pipeline together.
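The effect of ReadCount is easier to see if you count what arrives in each pipeline iteration. This sketch writes a hypothetical ten-line file to the temp folder (standing in for the test file above) and counts the lines in every object Get-Content sends down the pipeline.

```powershell
# A hypothetical ten-line test file.
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'readcount-demo.txt'
Set-Content -Path $file -Value (1..10 | ForEach-Object { "Line $_" })

# Default (ReadCount 1): ten pipeline objects, each a single string.
$default = Get-Content -Path $file | ForEach-Object { $_.Count }

# ReadCount 5: two pipeline objects, each an array of five lines.
$batches = Get-Content -Path $file -ReadCount 5 | ForEach-Object { $_.Count }

# ReadCount 0: one pipeline object holding all ten lines.
$single = Get-Content -Path $file -ReadCount 0 | ForEach-Object { $_.Count }
```

With the default, each object is a single string (so its Count is 1). With ReadCount 5, $batches holds 5 and 5, and with ReadCount 0 there is just one array of all ten lines.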

TotalCount

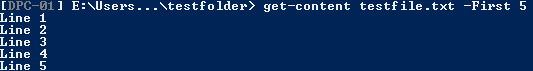

By using TotalCount (or its aliases ‘First’ and ‘Head’), you can define how many lines are read from the top of the file(s).

Get-Content testfile.txt -TotalCount 5

Get-Content testfile.txt -First 5

Here we are reading only the first 5 lines of the file ‘testfile.txt’.

Tail

Tail is similar to TotalCount, but reads from the end of the file instead. You can use its alias ‘Last’ if you prefer.

Get-Content testfile.txt -Tail 5

Get-Content testfile.txt -Last 5

You can probably guess what this does! Yes, you are right, it reads the last 5 lines of ‘testfile.txt’.
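A quick sketch of TotalCount and Tail side by side, using a hypothetical ten-line file in the temp folder:

```powershell
# A hypothetical ten-line file: "Line 1" through "Line 10".
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'head-tail-demo.txt'
Set-Content -Path $file -Value (1..10 | ForEach-Object { "Line $_" })

$head = Get-Content -Path $file -TotalCount 3   # same as -First 3 or -Head 3
$tail = Get-Content -Path $file -Tail 3         # same as -Last 3

$head   # Line 1, Line 2, Line 3
$tail   # Line 8, Line 9, Line 10
```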

Set-Content

This cmdlet can be used to write content to a file.

Set-Content testfile.txt 'this is a test'

Set-Content -Path .\testfile.txt -Value 'this is a test'

This example shows how to write a simple string to a file. You use the Path parameter to point to a file (it will be created automatically if it doesn’t exist) and the Value parameter for the information you want written to it. As you see from this example, both Path and Value are positional parameters, so their names can be omitted as long as the values are given in the correct order: Path first, and Value second.

Both the Path and Value parameters also support getting values from the pipeline.

'this is a test' | Set-Content testfile.txt

ls testfile.txt | Set-Content -Value 'this is a test'

Note that when the path is passed in through the pipeline, you have to specify the Value parameter by name, or it will not work: an unnamed first argument is always bound positionally to Path. Also be aware that if you use Set-Content on an existing file, it will overwrite any data already present in it.
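Both pipeline styles, and the overwriting behaviour, can be checked with a small sketch. It assumes a hypothetical file in the temp folder and uses Get-Item in place of ls so that it runs the same on every platform.

```powershell
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'set-content-demo.txt'

# Value from the pipeline, Path given positionally.
'first version' | Set-Content $file

# Path from the pipeline (via the FileInfo object), so Value must be named.
Get-Item -Path $file | Set-Content -Value 'second version'

# Set-Content replaced the old content instead of appending to it.
$content = Get-Content -Path $file
```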

Force

By adding the Force parameter, you are able to write to a read-only file.

Include, Exclude, Filter

Set-Content also supports Include, Exclude and Filter. They work the same way as for Get-Content, so I won’t go into any detail about them here.

Add-Content

Whereas Set-Content will replace existing data in a file, you can use Add-Content to append data to an existing file. Other than that, it supports much the same parameters as Set-Content.

'another line' | Add-Content testfile.txt
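A minimal sketch contrasting the two, assuming a hypothetical file in the temp folder: Set-Content starts the file fresh, and Add-Content then appends to it.

```powershell
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'add-content-demo.txt'

Set-Content -Path $file -Value 'first line'   # start with a fresh file
'another line' | Add-Content -Path $file      # append instead of replace

$lines = Get-Content -Path $file              # first line, another line
```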

Out-File

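Out-File is another cmdlet you have at your disposal for writing data to a file. The main difference between it and Set-Content and Add-Content is that Out-File sends the data through PowerShell’s formatting system, so the file ends up with the same text you would see on the screen. I’ll come back to this difference at the end of the post.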

Out-File -FilePath testfile.txt -InputObject 'This is a test'

As you see, the parameters we use differ somewhat from Add-Content and Set-Content. Here we use FilePath instead of Path, and InputObject instead of Value. Another difference is that the FilePath parameter does not accept pipeline input; only InputObject does.

'This is a test' | Out-File testfile.txt

This gives us the same result as the last example. Notice how I can omit the FilePath parameter name, since FilePath is the first positional parameter.

Let’s go through some of the other parameters of Out-File.

Append

The default behaviour of Out-File is to overwrite the contents of a file, but by using the Append parameter, you will instead add the data to the end of the file.

'Line 2' | Out-File testfile.txt -Append

NoClobber

When the NoClobber parameter is used, a terminating error occurs if the file already exists. This can come in handy if you only want to write to a file that doesn’t exist yet, or if you want to run some additional code when the file does exist.

'Line 2' | Out-File testfile.txt -NoClobber
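Here is a sketch combining Append and NoClobber, assuming a hypothetical file in the temp folder. The -ErrorAction Stop is an addition of mine: it guarantees the NoClobber failure can be caught with try/catch, so you can run your own code when the file already exists.

```powershell
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'out-file-demo.txt'

'Line 1' | Out-File -FilePath $file            # creates or overwrites the file
'Line 2' | Out-File -FilePath $file -Append    # adds to the end instead

# NoClobber refuses to touch an existing file.
try {
    'Line 3' | Out-File -FilePath $file -NoClobber -ErrorAction Stop
    $clobberBlocked = $false
}
catch {
    $clobberBlocked = $true
    Write-Host "Not written: $($_.Exception.Message)"
}

$lines = Get-Content -Path $file   # Line 1, Line 2
```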

Encoding

This parameter lets you specify the type of character encoding you want used when writing the file. You can choose between ‘Unicode’, ‘UTF7’, ‘UTF8’, ‘UTF32’, ‘ASCII’, ‘BigEndianUnicode’, ‘Default’ and ‘OEM’.

'Text' | Out-File testfile.txt -Encoding 'UTF8'
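You can verify which encoding was used by looking at the first bytes of the file. The meaning of ‘UTF8’ differs between Windows PowerShell (UTF-8 with a byte order mark) and PowerShell 6 and later (UTF-8 without one), so this sketch uses ‘Unicode’ instead: UTF-16 little-endian, which starts with the byte order mark FF FE in both. The file name is hypothetical.

```powershell
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'encoding-demo.txt'
'Text' | Out-File -FilePath $file -Encoding Unicode

# Read the raw bytes and show the byte order mark at the start of the file.
$bytes = [System.IO.File]::ReadAllBytes($file)
'{0:X2} {1:X2}' -f $bytes[0], $bytes[1]   # FF FE
```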

Force

As with Add/Set-Content, the Force parameter lets you write to a read-only file.

Export-Csv

The Export-Csv cmdlet is a specialised cmdlet for writing CSV files. It takes objects as input, converts each of them to a line of comma-separated values, and writes the result to a file.

Get-Service | Export-Csv test.csv

As shown in this example, we take the objects returned by the Get-Service cmdlet and save them as a CSV file called ‘test.csv’. The InputObject parameter is used for the incoming data, and here we pass that data through the pipeline. In most cases, this is how you have to do it when working with Export-Csv. If you look up InputObject in the help information for Export-Csv, you will see that it is of type PSObject, not an array of PSObjects. That is essential to know! If we passed the output of Get-Service through the parameter instead of the pipeline, we would not get the desired result. The reason it works through the pipeline is the way pipeline input is processed: an array sent through the pipeline is automatically unrolled, so the cmdlet receives one object at a time. When the data comes in through the parameter, the object the cmdlet works with is the array itself, so you get a single row describing the array (with columns such as Length) instead of one row per service.
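The difference is easy to demonstrate. Because Get-Service is only available on Windows, this sketch uses two hypothetical [pscustomobject] records instead, and writes both variants to a scratch folder so you can compare them.

```powershell
$dir = Join-Path ([System.IO.Path]::GetTempPath()) 'csv-demo'
New-Item -ItemType Directory -Path $dir -Force | Out-Null

# Two hypothetical objects standing in for the output of a real cmdlet.
$people = @(
    [pscustomobject]@{ Name = 'Graham'; Age = 42 }
    [pscustomobject]@{ Name = 'Peter';  Age = 35 }
)

# Through the pipeline: the array is unrolled, one CSV row per object.
$people | Export-Csv -Path (Join-Path $dir 'piped.csv') -NoTypeInformation

# Through -InputObject: the array itself is the single exported object,
# so the columns are the array's own properties (Length, Rank, ...).
Export-Csv -InputObject $people -Path (Join-Path $dir 'param.csv') -NoTypeInformation

$pipedLines = Get-Content -Path (Join-Path $dir 'piped.csv')
$paramLines = Get-Content -Path (Join-Path $dir 'param.csv')
```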

Lets look at some of the other parameters supported by this cmdlet.

Delimiter

This parameter lets you choose which character to use as the value separator.

Get-Service | Export-Csv test.csv -Delimiter ';'

Here we are saving the results of Get-Service as a CSV file using ‘;’ as the separator.

NoTypeInformation

You will normally want to use this parameter when writing CSV files. Without it, Windows PowerShell writes the type name of the exported objects as the first line of the file (a line starting with ‘#TYPE’), which is rarely needed. In PowerShell 6 and later, leaving out the type information is the default.

Get-Service | Export-Csv test.csv -NoTypeInformation
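A sketch showing both parameters together, with hypothetical objects and file name: the file starts directly with the header row, and the values are separated by semicolons.

```powershell
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'delimiter-demo.csv'
$people = @(
    [pscustomobject]@{ Name = 'Graham'; Age = 42 }
    [pscustomobject]@{ Name = 'Peter';  Age = 35 }
)

# Semicolon as separator, and no '#TYPE ...' line at the top.
$people | Export-Csv -Path $file -Delimiter ';' -NoTypeInformation

$lines = Get-Content -Path $file
$lines[0]   # "Name";"Age"
$lines[1]   # "Graham";"42"
```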

UseCulture

By using the UseCulture parameter, Export-Csv will get the separator character from the current culture.

Force

Force lets you overwrite a read-only file.

Import-Csv

If you want to read a CSV file, Import-Csv lets you do this. If that were all you wanted, you might as well just use Get-Content, though. The reason to use Import-Csv instead is that it doesn’t just read the file, it also converts it into objects: one object per row, with the column headers as property names. As you know, PowerShell is all about objects, so having the input as objects means we can easily process it further.

Import-Csv file.csv

Here we are reading the CSV file ‘file.csv’ and showing it on the screen after it has been converted into objects. You will see that it doesn’t look anything like a CSV file anymore, and that’s because it isn’t. It is now a collection of proper PowerShell objects. Let’s look at some of the other parameters it supports.
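To show what “further processing” can look like, here is a sketch with a small hypothetical CSV file. One thing to keep in mind: Import-Csv imports every value as a string, so cast it before comparing numerically.

```powershell
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'import-demo.csv'
# A small hypothetical CSV file with a header row.
Set-Content -Path $file -Value @(
    'Name,Age'
    'Graham,42'
    'Peter,35'
)

# One object per row, with properties named after the header row.
$rows = Import-Csv -Path $file

# Values are strings, so cast Age to [int] before comparing.
$older = $rows | Where-Object { [int]$_.Age -gt 40 } | ForEach-Object { $_.Name }
$older   # Graham
```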

Delimiter

Like its counterpart, Import-Csv supports the Delimiter parameter, letting you choose the delimiting character that is used for value separation in the file.

Encoding

You can also specify what text encoding was used in the CSV file by using the Encoding parameter.

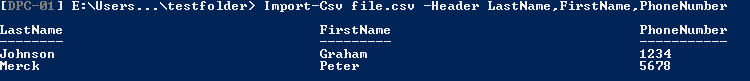

Header

The Header parameter lets you define your own column headers in the object created by the cmdlet. This is useful if you have a CSV file with no header information in it, just raw data.

Let’s say you have the following CSV:

"Johnson","Graham","1234"
"Merck","Peter","5678"

As you see, we have a CSV file without any headers.

Import-Csv file.csv -Header LastName,FirstName,PhoneNumber

By using the Header parameter when importing the data, we supply the header information to the cmdlet manually.
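Here is the same example as a runnable sketch; the file name is hypothetical and the contents are the two rows shown above.

```powershell
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'no-header.csv'
# The header-less CSV from the example above.
Set-Content -Path $file -Value @(
    '"Johnson","Graham","1234"'
    '"Merck","Peter","5678"'
)

# Supply the column names ourselves.
$contacts = Import-Csv -Path $file -Header LastName, FirstName, PhoneNumber
$contacts
```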

UseCulture

As with Export-Csv, the UseCulture parameter uses the delimiter defined in the current culture.

Export-Clixml

The last two cmdlets I’m going to discuss in this post, Export-Clixml and Import-Clixml, are a bit different from the rest. They are a very specialised pair of cmdlets used to serialise PowerShell objects to a file. The format used is XML, as you could probably guess from the cmdlet names.

Get-Service | Export-Clixml services.xml

Here we get service information from the local computer and save the resulting objects to a file called ‘services.xml’. Let’s explore some of the supported parameters of this cmdlet.

Depth

The Depth parameter lets you define how many levels of contained objects are included in the XML. The default value is 2.

Encoding

Used to specify the text encoding used to write the file. The usual encoding standards are supported, and the default value is ‘Unicode’.

Force

Lets you write to a read-only file.

NoClobber

Similar to the Out-File cmdlet, when NoClobber is used, PowerShell will generate a terminating error if a file already exists with the same name.

Import-Clixml

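Import-Clixml is used to read serialised objects and re-create the objects they represent. Note that for most types the re-created objects are deserialised copies: they keep the property values of the originals, but not their methods.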

$services = Import-Clixml services.xml

Here we are reading data from the file ‘services.xml’ and re-creating the object, saving it in the ‘services’ variable.
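A round trip is easy to try without depending on Get-Service. This sketch uses a hypothetical [pscustomobject] (the property values are made up) and a hypothetical file in the temp folder.

```powershell
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'clixml-demo.xml'

# A hypothetical object with a nested array property.
$original = [pscustomobject]@{ Name = 'Spooler'; Status = 'Running'; Tags = @('print', 'local') }

# Serialise to XML, then re-create the object from the file.
$original | Export-Clixml -Path $file
$restored = Import-Clixml -Path $file

$restored.Name             # Spooler
$restored.Tags -join ', '  # print, local
```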

Set-Content vs Out-File

So, we have two cmdlets for writing content to a file. Which one should you use? What is the difference between them? Let’s take a closer look.

The biggest difference between them is how they handle formatting when writing to a file. Set-Content (and Add-Content) convert each input object to a string on its own, whereas Out-File sends the objects through PowerShell’s formatting system and preserves the formatting you see on the screen. It works the same as using the redirection operator ‘>’. Let’s demonstrate this:

Get-Service | Set-Content services1.txt

Get-Service | Out-File services2.txt

Here we get service information and write it to a file, using both Set-Content and Out-File. The difference between these cmdlets becomes quite obvious when we look at the files created.

So what is happening here? As you see, the file created by Out-File mirrors the output you would get from running Get-Service directly: it takes the formatting data and writes the output to the file the same way. That is clearly NOT how Set-Content works, though. What you see in its file is the type name of each object in the array returned by Get-Service. Set-Content converts every object to a string using the object’s ToString method, and the ServiceController class returned by Get-Service doesn’t override ToString with anything more useful, so it simply returns its type name (System.ServiceProcess.ServiceController) rather than, for example, the service name. Export-Csv does the same for property values that are themselves objects.

To get a result similar to Out-File’s, you could do it like this:

Get-Service | Out-String | Set-Content services3.txt

As you see, the results are now as expected. We use Out-String to generate the strings that will be written to file by Set-Content.
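The same comparison can be reproduced on any platform with a hypothetical [pscustomobject] in place of Get-Service. For a custom object, the string conversion Set-Content uses gives the ‘@{Name=...; Status=...}’ form, while Out-File and Out-String give the formatted table.

```powershell
$dir = Join-Path ([System.IO.Path]::GetTempPath()) 'set-vs-out-demo'
New-Item -ItemType Directory -Path $dir -Force | Out-Null
$item = [pscustomobject]@{ Name = 'Spooler'; Status = 'Running' }

# Set-Content writes the object's string form: @{Name=Spooler; Status=Running}
$item | Set-Content -Path (Join-Path $dir 'set.txt')

# Out-File writes what the console would show: a formatted table.
$item | Out-File -FilePath (Join-Path $dir 'out.txt')

# Out-String renders the formatted view first, so Set-Content receives text.
$item | Out-String | Set-Content -Path (Join-Path $dir 'outstring.txt')

$setText       = Get-Content -Path (Join-Path $dir 'set.txt') -Raw
$outText       = Get-Content -Path (Join-Path $dir 'out.txt') -Raw
$outStringText = Get-Content -Path (Join-Path $dir 'outstring.txt') -Raw
```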

.NET

I won’t go into great detail about how to use .NET classes to work with files (I’ll save that for another blog post), but I can quickly point you in the right direction if you want to try it out. Take a look at the System.IO namespace, which contains a handful of classes for reading and writing files, such as File, StreamReader and StreamWriter.
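As a starting point, here is a minimal sketch using System.IO.File and System.IO.StreamReader from PowerShell; the file name is hypothetical. One common gotcha: .NET resolves relative paths against the process working directory, which is not necessarily your current PowerShell location, so the sketch builds a full path.

```powershell
# Use a full path; .NET does not know about PowerShell's current location.
$file = Join-Path ([System.IO.Path]::GetTempPath()) 'dotnet-demo.txt'

# Write and read whole files in one call.
[System.IO.File]::WriteAllLines($file, [string[]]@('first line', 'second line'))
$lines = [System.IO.File]::ReadAllLines($file)

# Or stream the file line by line, which avoids loading it all into memory.
$reader = [System.IO.StreamReader]::new($file)
try {
    $count = 0
    while ($null -ne ($line = $reader.ReadLine())) {
        $count++
    }
}
finally {
    # Always release the file handle, even if reading fails.
    $reader.Dispose()
}
```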

Final Notes

I have gone through the most common cmdlets for working with files in PowerShell. There are, of course, many more cmdlets that let you interact with files or folders in some way. If you want to list all commands that support the Path parameter, you can run the following:

Get-Command | Where-Object {$_.Parameters.Keys -contains 'Path'}

I hope you have enjoyed this post. Please let me know if you have questions or have spotted any errors. Until next time…

Excellent overview. I had not realized the differences between Set-Content and Out-File. Would love to see you do a similar exposé on ConvertFrom-String!


Hi Ryan. I’m sorry I’m late replying to your comment. I’m glad you found the article helpful. I’m a bit unsure what you mean by ConvertFrom-Sting though. There doesn’t seem to be any cmdlet with that name. Do you mean ConvertFrom-SecureString or ConvertFrom-StringData?
